Neon is a true serverless database with built-in connection pooling and automatic scaling. To round out the serverless stack, we recently co-launched the Inngest integration with Neon, which uses Neon’s Logical Replication to trigger serverless functions (Vercel Functions, AWS Lambda functions, Cloudflare Workers, and more) from database changes.

In this blog post, we discuss a few use cases unlocked by these new serverless triggers, from building AI workflows to user onboarding.

Use case #1: The quickest way to prototype AI workflows

Let’s jump right into the burning topic of AI workflows.

As AI features combine more and more tools and add reasoning and planning, the development effort required to ship even a first version keeps growing. This is where building your AI workflows on top of your Neon database with serverless triggers comes in handy.

With a few lines of code, Inngest lets you plug an AI workflow into your Neon database:

export const contactEnrichment = inngest.createFunction(
  {
    id: "Contact Enrichment Workflow",
  },
  { event: "db/contacts.inserted" },
  async ({ event, step }) => {
    const { email, companyName } = event.data;

    // Step 1: Gather company information
    const companyInfo = await step.run("gather-company-info", async () => {
      const completion = await openai.chat.completions.create({
        model: "gpt-4",
        messages: [
          {
            role: "system",
            content: `Find key business information about ${companyName}. Include: industry, approximate size, key products/services, and main competitors.`,
          },
        ],
      });
      return completion.choices[0].message.content;
    });

    // Step 2: Enrich contact details
    const enrichedContact = await step.run("enrich-contact", async () => {
      // ...
    });

    // Step 3: Generate engagement suggestions
    const engagementSuggestions = await step.run("generate-suggestions", async () => {
      // ...
    });

    // Step 4: Save information
    await step.run("save-information", async () => {
      // ...
    });
  }
);

Your AI workflows immediately benefit from the features of Inngest Functions.

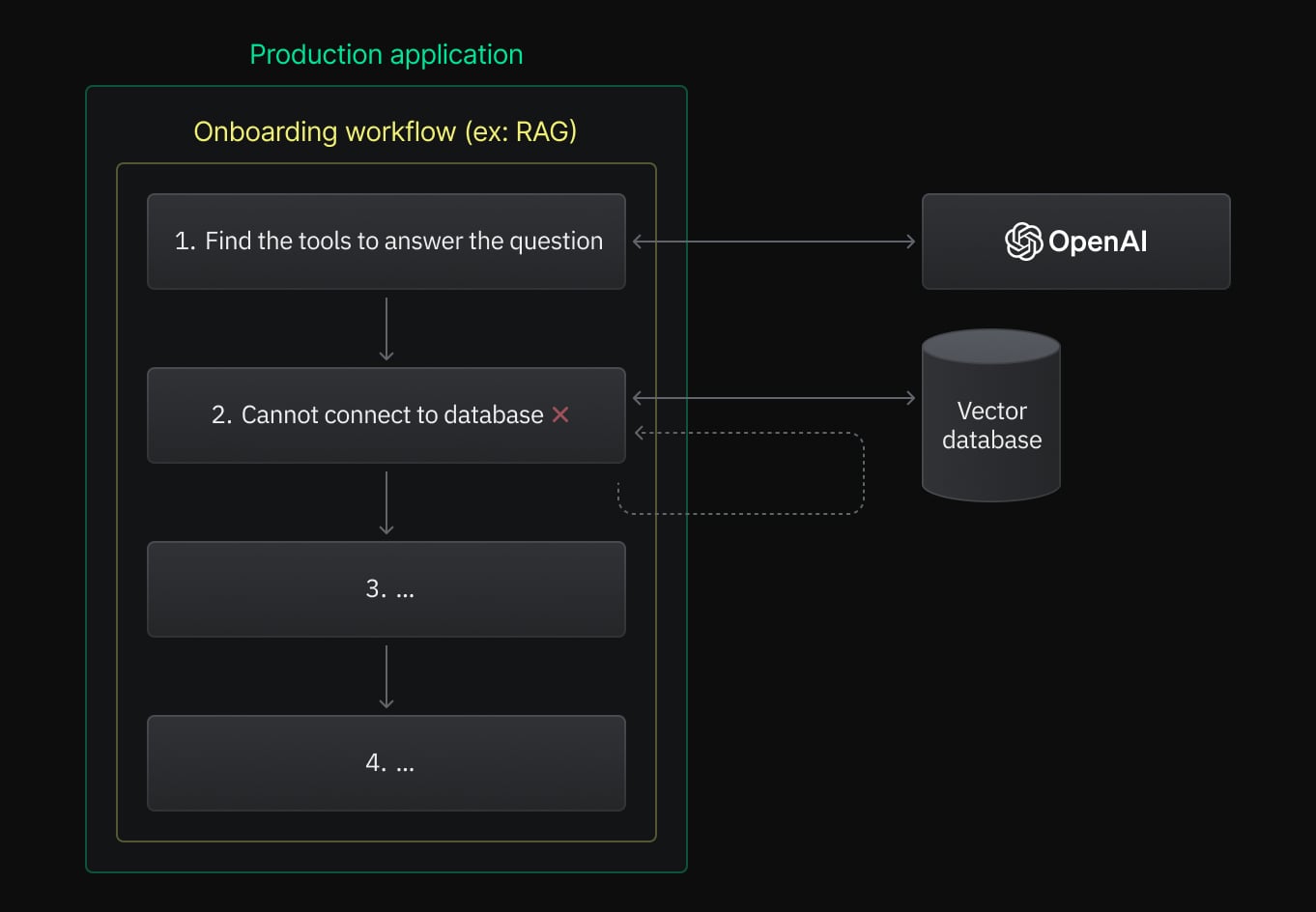

Fighting API flakiness and improving workflow reliability

Inngest Functions are built around step.run(), which divides your AI workflow into steps that are retried independently and whose results are cached. With no extra boilerplate, a workflow recovers from external failures without rerunning the steps that already succeeded, which saves costly LLM calls.
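To make the idea of retriable, cached steps concrete, here is a minimal toy sketch in plain TypeScript. It is not how Inngest implements step.run(): the `runStep` and `runWithRetries` helpers, the in-memory cache, and the synchronous steps are all assumptions made for illustration. It only shows why a retry after a failure does not repeat a step that has already succeeded.

```typescript
// Toy model of retriable, memoized steps (illustrative only, not Inngest's code).
type StepCache = Map<string, unknown>;

// Run `fn` at most once per step id; later attempts reuse the stored result.
function runStep<T>(cache: StepCache, id: string, fn: () => T): T {
  if (cache.has(id)) {
    return cache.get(id) as T;
  }
  const result = fn(); // may throw; nothing is cached in that case
  cache.set(id, result);
  return result;
}

// Re-run the whole workflow until it succeeds or the attempts run out.
function runWithRetries<T>(workflow: (cache: StepCache) => T, maxAttempts: number): T {
  const cache: StepCache = new Map();
  let lastError: unknown;
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    try {
      return workflow(cache);
    } catch (error) {
      lastError = error;
    }
  }
  throw lastError;
}

// Hypothetical workflow: a costly "LLM call" followed by a flaky API call.
let llmCalls = 0;
let apiCalls = 0;

const summary = runWithRetries((cache) => {
  const companyInfo = runStep(cache, "gather-company-info", () => {
    llmCalls++;
    return "Acme Corp, industrial tooling";
  });
  return runStep(cache, "enrich-contact", () => {
    apiCalls++;
    if (apiCalls === 1) throw new Error("upstream API timed out");
    return `enriched: ${companyInfo}`;
  });
}, 3);

console.log(summary, { llmCalls, apiCalls });
```

In this sketch the second attempt reuses the cached result of the first step, so the simulated LLM call runs once even though the workflow body runs twice.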

Scaling with multiple tools and models

AI workflows now mix multiple tools (third-party APIs) and models to get the best results, which means dealing with multiple rate-limiting policies. Adding concurrency or throttling policies that match your users’ usage takes only a few lines of configuration:

export const contactEnrichment = inngest.createFunction(
  {
    id: "Contact Enrichment Workflow",
    throttle: { // 5 runs per minute
      limit: 5,
      period: "1m",
    },
    concurrency: 5,
  },
  { event: "db/contacts.inserted" },
  async ({ event, step }) => {
    const { email, companyName } = event.data;
    // ...
  }
);

Adding reasoning and “Human in the loop” to your AI workflows

Are you a developer working at the edge of AI engineering and building AI agents?

Then you need a way for your AI workflow to execute steps dynamically and hand control back to a human. Inngest Functions’ waitForEvent(), combined with the Inngest Workflow Kit, gives your AI agent the freedom to plan and to wait for human feedback.
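The "pause until a human responds" pattern can be sketched as a small, synchronous toy model. This is only an illustration of the idea, not the waitForEvent() API, which is asynchronous and managed by Inngest. The `PendingWait` shape, the `checkWait` helper, and the `app/suggestions.reviewed` event name are all hypothetical.

```typescript
// Toy, synchronous model of "pause until a matching event arrives or time runs out".
interface AppEvent {
  name: string;
  data: Record<string, string>;
}

interface PendingWait {
  eventName: string;  // event the paused run is waiting for
  matchField: string; // field in event.data that must equal matchValue
  matchValue: string;
  deadline: number;   // timestamp (ms) after which the wait times out
}

type WaitResult =
  | { status: "waiting" }
  | { status: "resumed"; event: AppEvent }
  | { status: "timed-out" };

// Decide what happens to a paused run given the events seen so far.
// In this toy model a matching event takes precedence over the timeout check.
function checkWait(wait: PendingWait, incoming: AppEvent[], now: number): WaitResult {
  const match = incoming.find(
    (e) => e.name === wait.eventName && e.data[wait.matchField] === wait.matchValue,
  );
  if (match) return { status: "resumed", event: match };
  return now >= wait.deadline ? { status: "timed-out" } : { status: "waiting" };
}

// A run paused on the human review of contact "c_42".
const wait: PendingWait = {
  eventName: "app/suggestions.reviewed",
  matchField: "contactId",
  matchValue: "c_42",
  deadline: 1000,
};
const otherContact: AppEvent = {
  name: "app/suggestions.reviewed",
  data: { contactId: "c_7", decision: "approved" },
};
const review: AppEvent = {
  name: "app/suggestions.reviewed",
  data: { contactId: "c_42", decision: "approved" },
};

const stillWaiting = checkWait(wait, [otherContact], 500);
const resumed = checkWait(wait, [otherContact, review], 600);
const timedOut = checkWait(wait, [otherContact], 1000);

console.log(stillWaiting.status, resumed.status, timedOut.status);
```

The point of the sketch is that the workflow needs both a match condition and a timeout, so the agent can resume with the human's decision or take a fallback path when no feedback arrives.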

Find a deployable open-source example of an AI agent built with Neon and Inngest in this article.

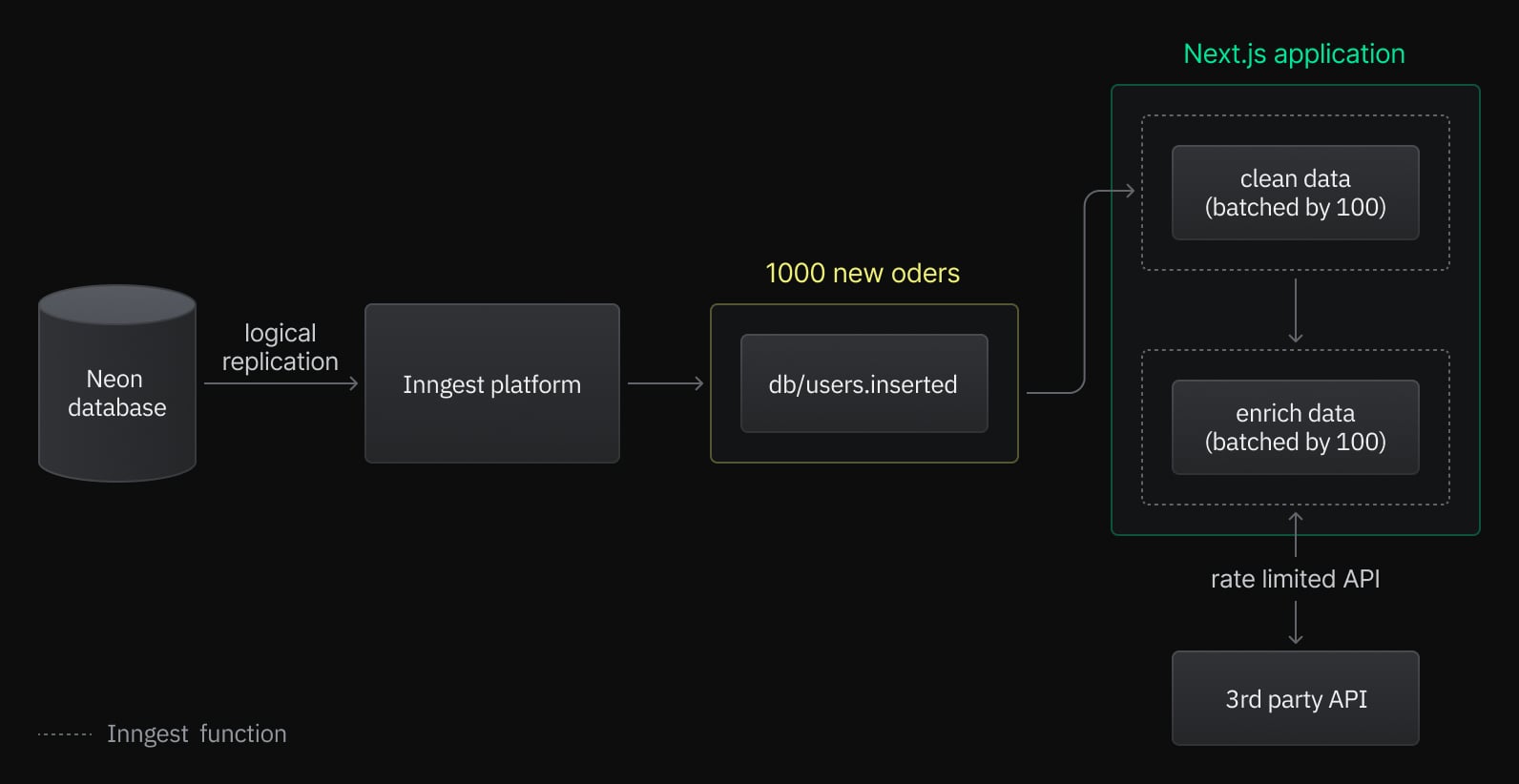

Use case #2: Stream your database into an ETL pipeline

Someone on Twitter once famously said:

ETL pipelines are pretty common when it comes to building SaaS applications, whether for importing user data, processing eCommerce orders, or enriching CRM contacts with AI.

ETL pipelines and other data-intensive features can now be driven directly by database updates, combined with the batching and throttling capabilities of Inngest.
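As a rough illustration of what batching database updates means, the toy sketch below groups a stream of change events into batches that close when they reach a maximum size or when a time window has elapsed. It is a simplified model, not Inngest's batching implementation. The `DbEvent` shape and the `batchEvents` helper are assumptions, and the events are assumed to arrive sorted by timestamp.

```typescript
// Toy model of size- and time-bounded batching (illustrative only).
interface DbEvent {
  name: string;
  ts: number; // arrival time in milliseconds
  data: Record<string, unknown>;
}

// Group events (assumed sorted by `ts`) into batches. A batch closes when it
// holds `maxSize` events or when `timeoutMs` has passed since its first event.
function batchEvents(events: DbEvent[], maxSize: number, timeoutMs: number): DbEvent[][] {
  const batches: DbEvent[][] = [];
  let current: DbEvent[] = [];
  for (const event of events) {
    const first = current[0];
    if (first !== undefined && (current.length >= maxSize || event.ts - first.ts >= timeoutMs)) {
      batches.push(current);
      current = [];
    }
    current.push(event);
  }
  if (current.length > 0) batches.push(current);
  return batches;
}

// Hypothetical stream of inserted orders.
const inserted = (ts: number): DbEvent => ({ name: "db/orders.inserted", ts, data: {} });

// Size-bound: four quick events with maxSize 2 produce two batches of two.
const bySize = batchEvents([inserted(0), inserted(10), inserted(20), inserted(30)], 2, 60000);

// Time-bound: the third event arrives after the 1-second window has closed.
const byTime = batchEvents([inserted(0), inserted(500), inserted(1500)], 100, 1000);

console.log(bySize.map((b) => b.length), byTime.map((b) => b.length));
```

Processing events in batches like this lets a pipeline make one bulk write or one API call per batch instead of one per row.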

Similar database updates can also be batched together at a more granular level with batching keys:

export const processOrders = inngest.createFunction(

{

id: "process-orders",

batchEvents: {

maxSize: 100,

timeout: "60s",

key: "event.data.shop_id",

},

},

{ event: "db/orders.inserted" },

async ({ events }) => {

// events.data contains from 1 to 100 records from the same `shop_id`

},

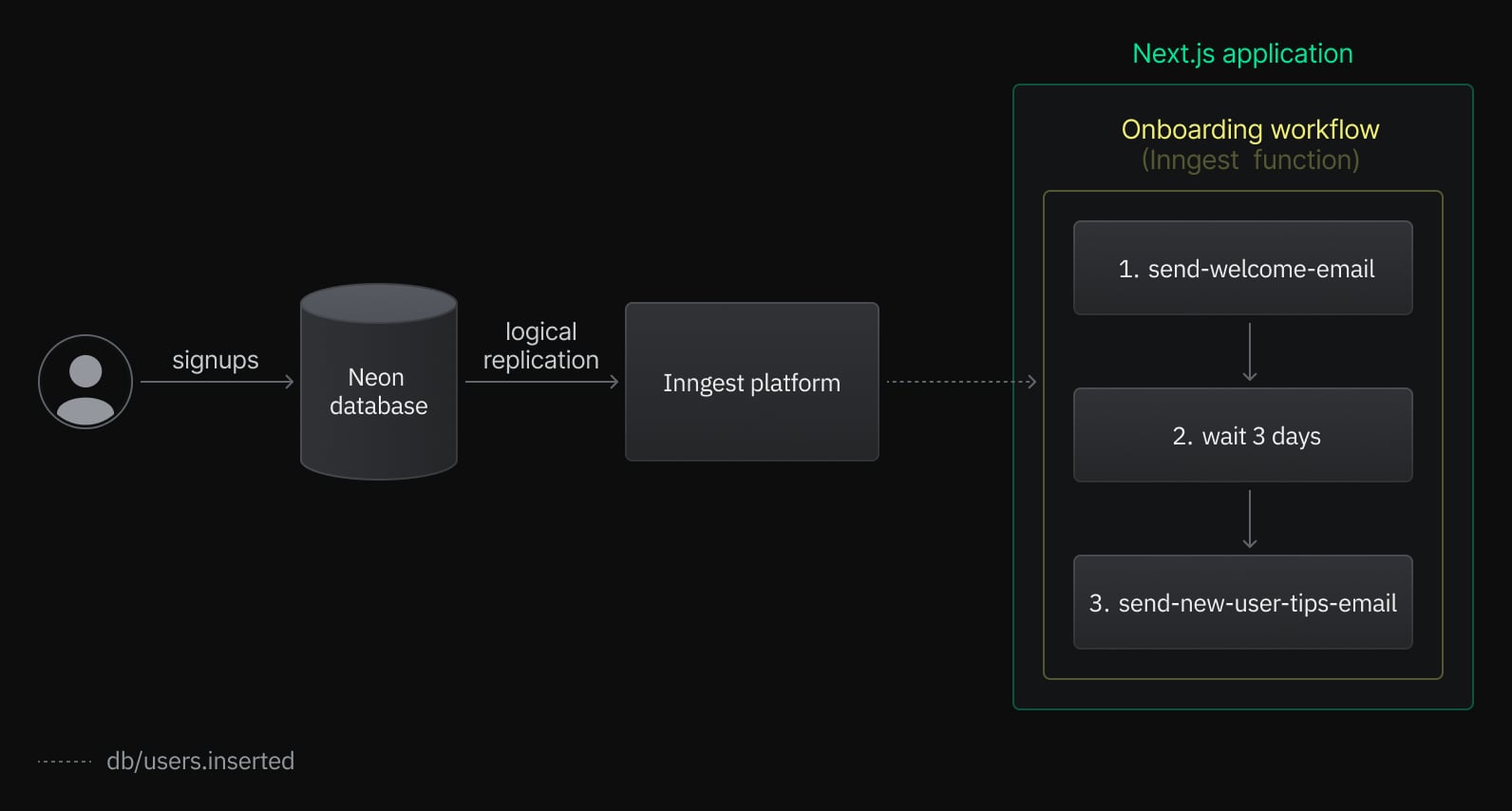

);Use case #3: Build user workflows that react to your database changes

Triggering serverless functions from Neon is also a good fit for regular SaaS features such as user onboarding workflows.

With a few lines of code, you can add the following Inngest Function to your Next.js application to power an email drip campaign that onboards your users with nice-looking Resend emails:

// inngest/functions/new-user.ts
import { inngest } from '../client'

export const newUser = inngest.createFunction(
  { id: "new-user" },
  { event: "db/users.inserted" },
  async ({ event, step }) => {
    const user = event.data.new;

    await step.run("send-welcome-email", async () => {
      // Send welcome email
      await sendEmail({
        template: "welcome",
        to: user.email,
      });
    });

    await step.sleep("wait-before-tips", "3d");

    await step.run("send-new-user-tips-email", async () => {
      // Follow up with some helpful tips
      await sendEmail({
        template: "new-user-tips",
        to: user.email,
      });
    });
  }
);

Get started

You can start triggering serverless functions from your Neon database in a few minutes by installing the Inngest integration with its one-click installation.